Livecode improvisation with Anne-Liis Poll

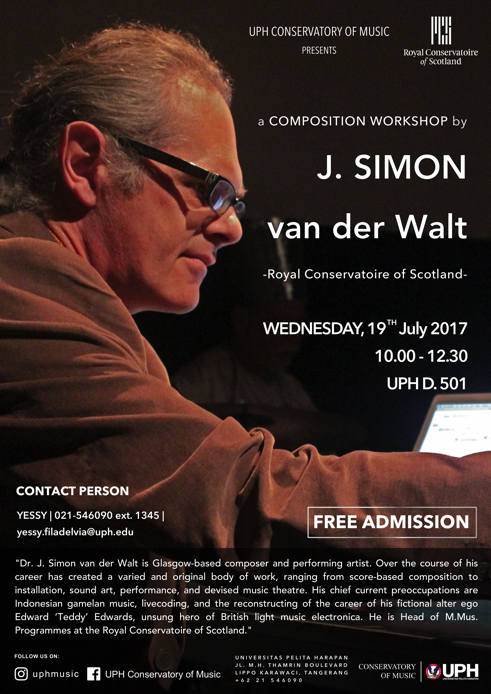

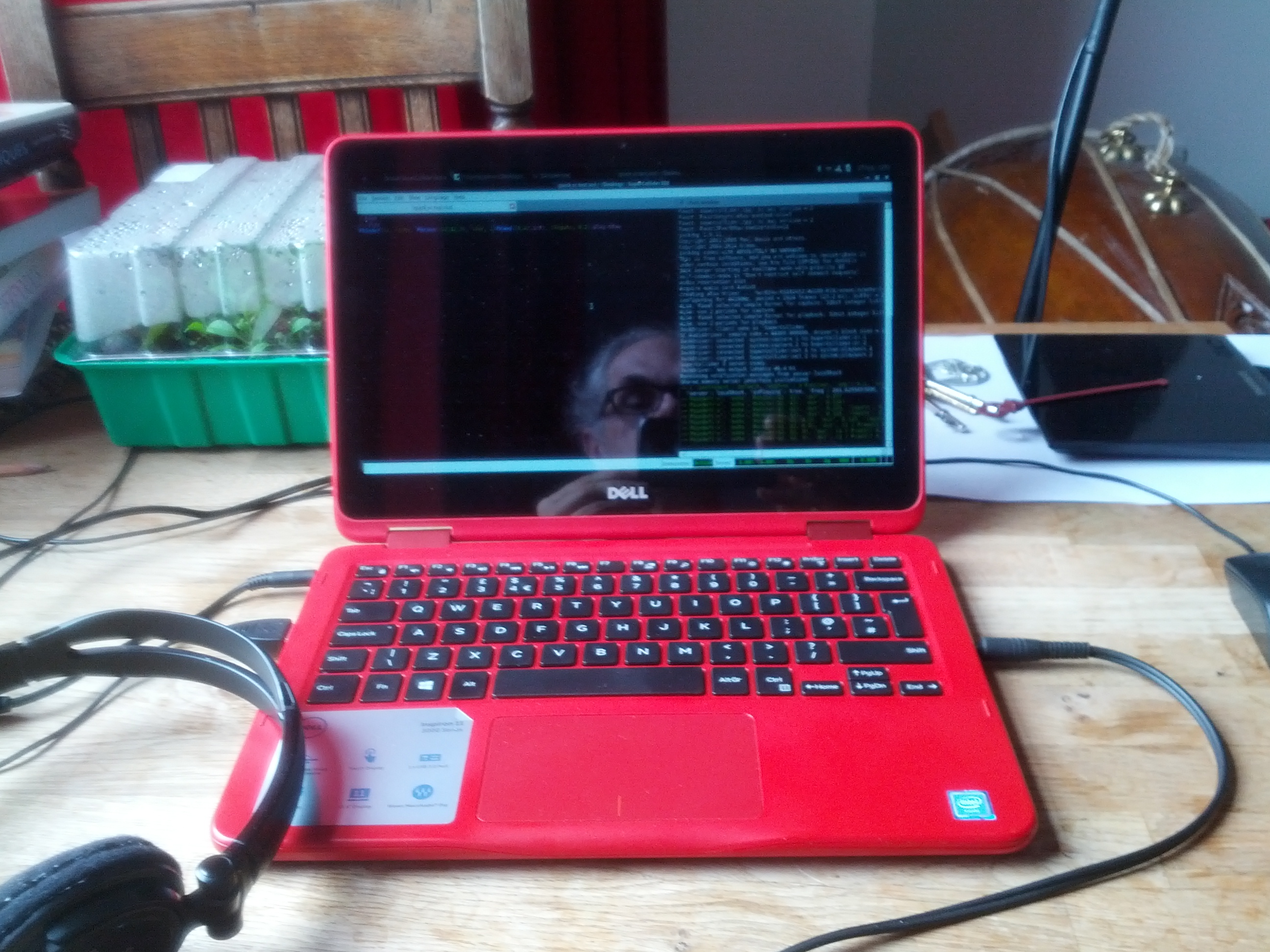

As part of the team that organised the third METRIC Improvisation Intensive at the Royal Conservatoire of Glasgow, I did not have as much time as I might have liked to improvise myself. I was pleased, however, to be joined for an impromptu livecoded session by Anne-Liis Poll, Professor of Improvisation at the Estonian Academy of Music and Theatre:

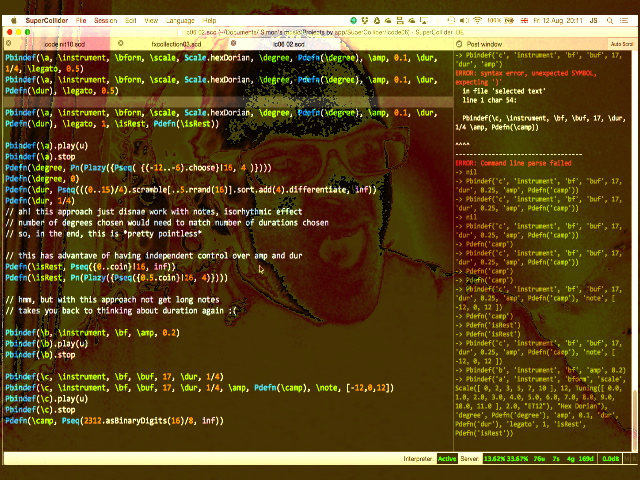

This did not quite turn out the way I had intended! In recent work I have been looking for a way to respond in code to live human improvisations, but this session turned into more of an algorave-ish groove built up from mechanical trumpet sounds, over which Anne-Liis worked with the voice. Even so, it was quite successful, and I hope to do more playing with other people along these lines.