Livecoding Audacity

Had a slightly crazy idea for the TidalClub Night Stream on 2022-12-21 – could I livecode something in Audacity?!?

Obviously the answer is basically no, as you can't edit anything in Audacity while it is playing. So instead, the plan is to use some of the generators in the Generate menu to improvise sounds: the Risset Drum, Rhythm Track, DTMF Tones and so on.

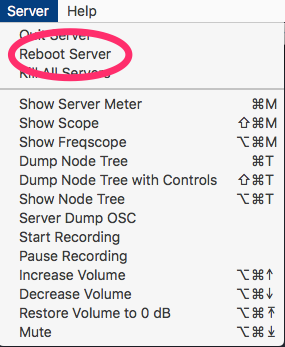

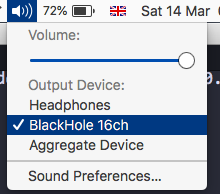

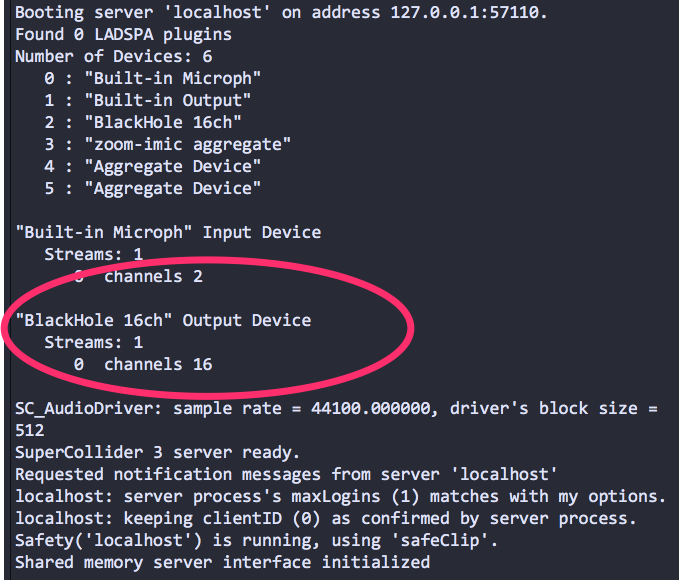

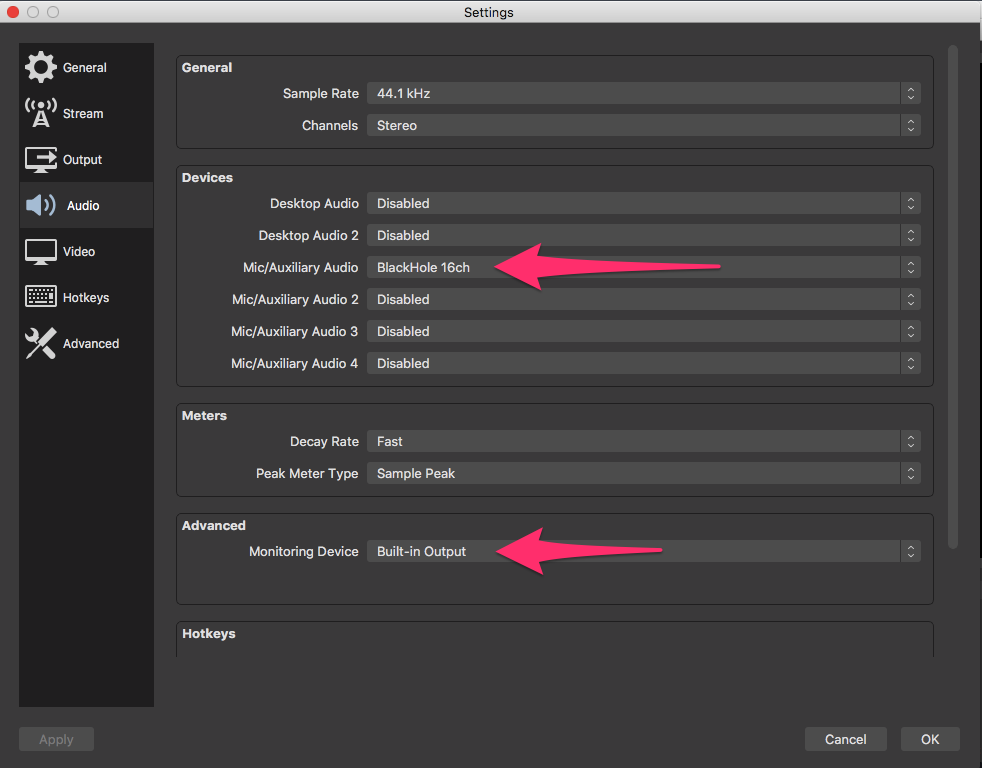

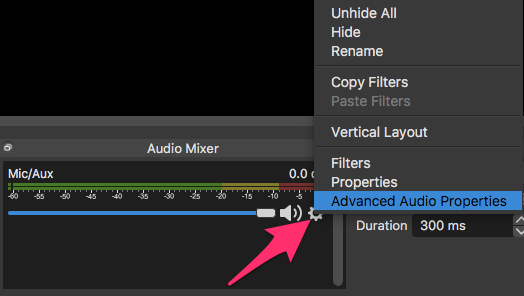

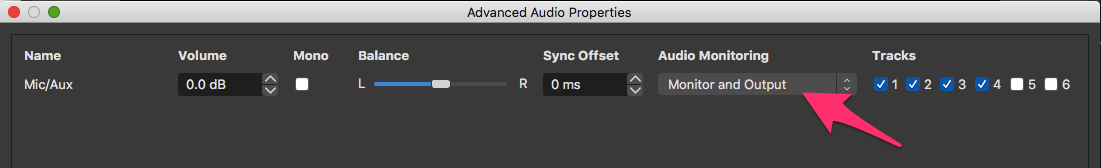

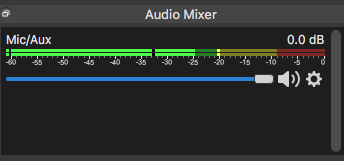

Once I have a minute's worth, I save a mixdown to disk. Meanwhile, a script running in SuperCollider waits a minute and then reloads the latest file exported from Audacity. So, in theory, I have a minute to create the next layer in Audacity while the previous layer is playing.
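The actual SuperCollider script isn't shown here, but the wait-then-reload idea is simple enough to sketch. The version below is in Python, not SuperCollider, and assumes that Audacity's mixdowns all land as `.wav` files in a single folder. The names `latest_export` and `reload_loop` are hypothetical, and playback is left as a callback placeholder rather than real audio code.

```python
import time
from pathlib import Path
from typing import Callable, Optional


def latest_export(directory: Path, pattern: str = "*.wav") -> Optional[Path]:
    """Return the most recently modified file matching `pattern`, or None."""
    candidates = [p for p in directory.glob(pattern) if p.is_file()]
    if not candidates:
        return None
    # Newest modification time wins, i.e. the last mixdown written to the folder.
    return max(candidates, key=lambda p: p.stat().st_mtime)


def reload_loop(
    directory: Path,
    on_load: Callable[[Path], None],
    interval: float = 60.0,
    cycles: Optional[int] = None,
) -> None:
    """Every `interval` seconds, hand the newest export to `on_load`.

    Hypothetical helper: `on_load` stands in for whatever actually plays
    the file (SuperCollider, in the setup described above).
    `cycles=None` keeps looping forever.
    """
    done = 0
    while cycles is None or done < cycles:
        # Leave the performer a minute to build the next layer.
        time.sleep(interval)
        newest = latest_export(directory)
        if newest is not None:
            on_load(newest)
        done += 1
```

Calling something like `reload_loop(Path("exports"), print)` would print the newest export once a minute. Like the setup described above, it reloads the latest file every cycle, even if nothing new has been exported. It picks files by modification time rather than by name, so the export filenames don't need any particular numbering scheme.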

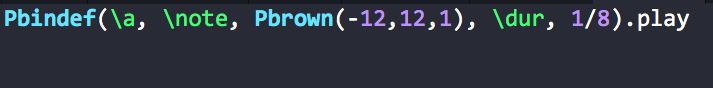

For some reason, I decided to bill the performance as 'dangdut', so I also have some simple, algorithmically generated dangdut-ish material ready to go in SuperCollider, to play alongside the much more abstract Audacity material.

Quick proof-of-concept video:
